PART 9: The Barbell Deployment

Transform neuropolar stance into strategy. Allocate 80% to judgment and core competencies, 20% to experimentation, 0% to the forbidden middle. Build compounding returns in chaos.

From Stance to Strategy

We’ve established the neuropolar architecture: stable core, adaptive edge, forbidden middle. We’ve grounded it in complexity science (Part 1), shame dynamics (Part 2), ergodicity math (Part 4), and nervous system regulation (Part 8).

Now: what do you actually do?

This part translates architecture into allocation. How to distribute your time, attention, and effort across the barbell structure. What goes in the core. What goes on the edge. Why the middle gets nothing.

The framework is simple. The application requires judgment.

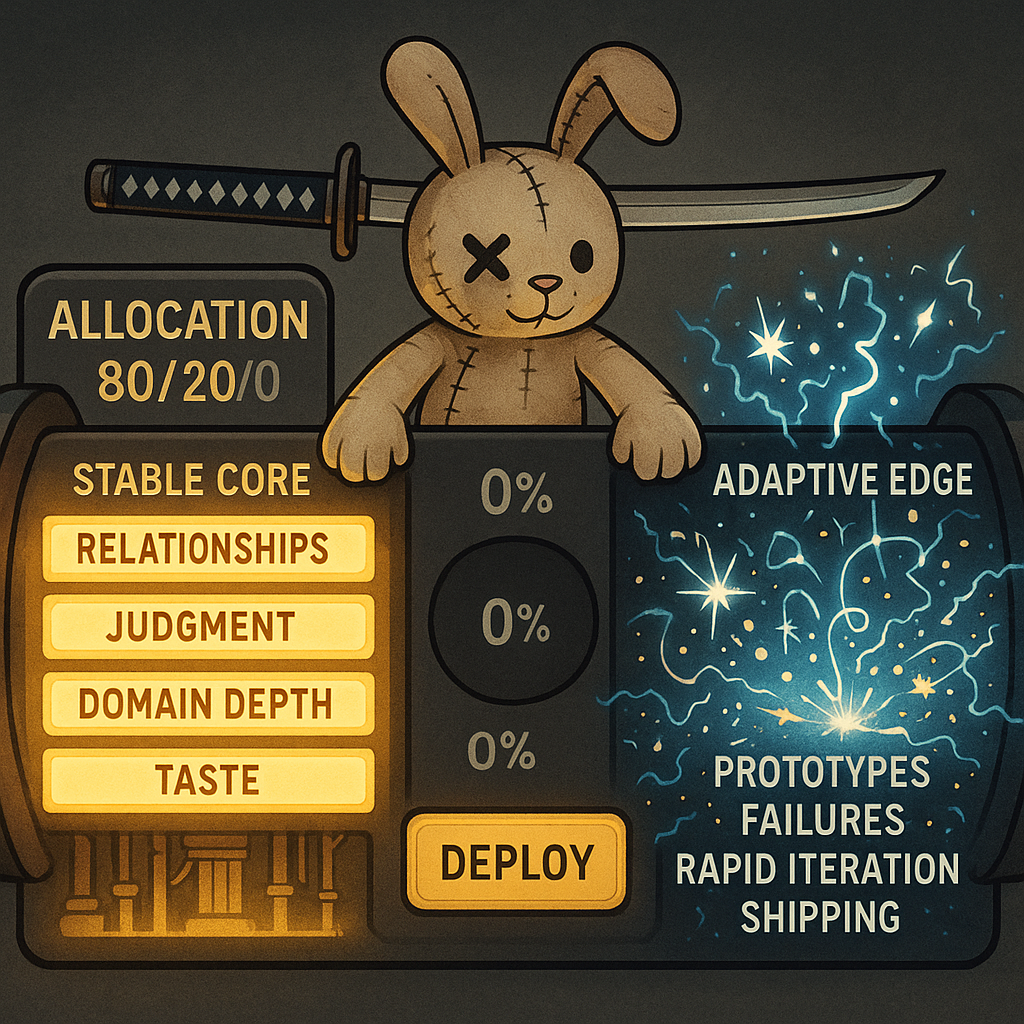

The Allocation

80% to stable core. The things that compound, that survive regime change, that become more valuable as AI capabilities increase. This is the foundation that makes the edge possible.

20% to adaptive edge. Aggressive experimentation, building before ready, learning by doing, shipping and iterating. This is where the compounding gains from Part 4 actually accumulate.

0% to the middle. No careful pilots. No measured adoption. No waiting for best practices. The middle is where you spend resources without capturing either stability or adaptivity.

This is a heuristic, not a law. Your specific ratio depends on your situation—runway, risk tolerance, domain, life stage. But the structure holds: the middle is always zero.
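To make the heuristic concrete, here is a minimal sketch that splits a weekly time budget across the barbell. The function name `allocate`, the 40-hour week, and the default shares are illustrative assumptions, not figures from the series; the only structural rule it encodes is that core and edge use the whole budget, so the middle gets nothing.

```python
def allocate(total_hours: float,
             core_share: float = 0.8,
             edge_share: float = 0.2) -> dict[str, float]:
    """Split a time budget across the barbell (hypothetical helper).

    The shares are adjustable, but they must sum to 1 so that
    nothing is left over for the middle.
    """
    if core_share < 0 or edge_share < 0:
        raise ValueError("shares must be non-negative")
    if abs(core_share + edge_share - 1.0) > 1e-9:
        raise ValueError("core and edge must use the whole budget")
    return {
        "core": total_hours * core_share,
        "edge": total_hours * edge_share,
        "middle": 0.0,  # the one fixed number in the heuristic
    }


# Assumed example: a 40-hour week at the default ratio.
week = allocate(40)
print(week)

# A different situation (say, a longer runway) might shift the ratio,
# but the middle stays at zero.
longer_runway = allocate(40, core_share=0.7, edge_share=0.3)
print(longer_runway)
```

Changing the ratio changes the first two numbers only. Any attempt to hold hours back for a third bucket raises an error.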

The Stable Core (80%)

The stable core is not “stuff you’re already doing” or “stuff that feels comfortable.” It’s specifically: the things that become more valuable as AI capabilities increase.

Judgment. The capacity to evaluate whether something is good. AI generates options; judgment selects among them. AI produces outputs; judgment determines if they’re true, useful, appropriate. The more AI can generate, the more valuable the ability to discern quality becomes.

Judgment is not automatic. It develops through deliberate practice: evaluating work (yours and others’), articulating why things work or don’t, refining your sense of quality over time. Every evaluation you make is training.

Taste. The subset of judgment that deals with selection and curation. When possibility space is infinite—which AI makes it—taste is what narrows it to the relevant. What to pursue. What to ignore. What’s elegant versus merely functional.

Taste cannot be outsourced. You can ask AI to generate options; you cannot ask AI to have taste on your behalf. Taste is irreducibly yours.

Domain depth. Deep expertise in a specific territory. Not “knowing facts about X” but understanding X well enough to catch errors, evaluate claims, and apply knowledge contextually. AI can be fluent in a domain; it can’t (yet) have the contextual judgment that comes from years of working in the domain.

Domain depth is what makes AI integration valuable rather than generic. A deep domain expert using AI produces different results than a novice using AI. The depth is the leverage.

Relationships. Trust networks that survive technological shifts. People who know you, who you can collaborate with, who will tell you the truth, who will help when you need it. These compound over time and cannot be automated.

Relationships are the stable infrastructure of a career. When everything else shifts, relationships remain. Invest accordingly.

Regulatory capacity. Per Part 8, the nervous system foundation that makes everything else sustainable. If you can’t regulate, you can’t hold the barbell. Regulation is core.

Identity beyond role. Who are you when your current job, title, and activities are removed? Is there a stable “you” that persists? This is the deepest core—the ground beneath the ground.

These six elements—judgment, taste, domain depth, relationships, regulatory capacity, identity—are the stable core. They share a common feature: AI amplifies rather than replaces them.

Allocating 80% of your resources here doesn’t mean doing nothing new. It means: deepening judgment, refining taste, extending domain expertise, investing in relationships, building regulatory capacity, developing stable identity. These are active investments, not passive coasting.

The Adaptive Edge (20%)

The adaptive edge is where you capture the compounding from Part 4. It’s aggressive experimentation with new capabilities, learning by doing, shipping before you’re ready.

Use tools daily. Not “evaluate tools” or “research tools.” Use them for real work. Every day. The compounding requires actual practice, not observation.

Which tools? Start with whatever is most relevant to your domain. For writing, Claude or GPT. For images, Midjourney or DALL-E. For code, Copilot or Cursor. The specific tools matter less than the practice of integration.

Build in public. Share your work, thinking, and process as you develop it. Not polished final products—work in progress. This does several things:

Accelerates feedback loops (you learn from response)

Builds visibility during the competence vacuum (Part 5)

Creates accountability (you’re more likely to continue what you’ve committed to publicly)

Develops voice and perspective through iteration

“But what if it’s not good enough?”

If you’re waiting until it’s “good enough,” you’re in the forbidden middle. Ship before ready. Iterate based on response. The edge is uncomfortable by design.

Embrace failure. On the edge, failure is information. A project that doesn’t work tells you something. A tool that doesn’t fit your workflow reveals your workflow more clearly. The edge is where you fail—repeatedly, quickly, cheaply—and learn from it.

Failure on the edge doesn’t threaten core identity (because identity lives in the stable core, not the edge). This is why building the core is prerequisite: it makes edge failure survivable.

Move fast. In compounding games (Part 4), speed dominates precision. Fast iteration with rougher outputs beats slow iteration with better outputs, because you learn more per unit of time. The edge is biased toward action.

This doesn’t mean being reckless. It means: action over analysis, shipping over perfecting, learning by doing over learning by studying.
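The speed-over-precision claim can be checked with a toy compounding model. This is a rough sketch under stated assumptions: skill grows by a fixed fraction per iteration, and the per-iteration gains (1%, 3%, 6%) and iteration counts are invented for illustration, not taken from Part 4.

```python
def skill_after(iterations: int, gain_per_iteration: float) -> float:
    """Skill multiple after compounding a fixed gain each iteration."""
    return (1.0 + gain_per_iteration) ** iterations


# Same time window, two styles (all numbers are assumptions):
# fast = 50 rough iterations, each teaching a little (1%)
# slow = 10 careful iterations, each teaching more (3%)
fast = skill_after(50, 0.01)
slow = skill_after(10, 0.03)
print(f"fast: {fast:.2f}x  slow: {slow:.2f}x")

# The result depends on the assumed gains. If each careful iteration
# taught 6% instead of 3%, the slow style would come out ahead.
slow_but_rich = skill_after(10, 0.06)
print(f"slow with 6% per iteration: {slow_but_rich:.2f}x")
```

In this toy model, speed wins when the number of iterations grows faster than the per-iteration learning shrinks. That is the condition worth checking in your own work.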

Experiment across modalities. Don’t limit yourself to familiar tool categories. Try things you don’t obviously need. Generate images even if you’re not a visual person. Experiment with code even if you’re not a developer. The edge is about expanding your capability surface.

The Forbidden Middle (0%)

The middle is where you spend resources without capturing the benefits of either pole. It feels responsible. It’s actually the riskiest position.

Careful evaluation periods. “I’m assessing the landscape before committing.” Reasonable-sounding, but actually just delay. The landscape will be different when you’re done assessing than when you started. The evaluation is never “done” in conditions of rapid change.

Measured pilots. “We’ll try this tool for a small use case and see how it goes.” Pilots designed to minimize exposure minimize learning. If you can’t fail meaningfully, you can’t learn meaningfully.

Waiting for best practices. “I’ll adopt when there are established approaches.” Best practices emerge from the early adopters. If you’re waiting for best practices, you’re waiting for other people to do the learning for you. By the time best practices exist, the compounding advantages have already been captured by the people who developed them.

Hedged positioning. “AI is interesting but I’m not ready to commit.” The hedge shows up on social media, in professional communications, in self-presentation. It earns neither the credibility of early adoption nor the distinctiveness of principled refusal. Just… hedge.

Balanced approaches. “I’m taking a balanced view—some AI adoption, some traditional methods.” The balance feels mature. But balance is middle. The barbell isn’t balanced; it’s polarized. Weight at the extremes, nothing in the center.

Each of these middle positions has a rational-sounding justification. Each is a way of preserving optionality while avoiding commitment. And each fails to capture the compounding that comes from actually committing.

Zero allocation to the middle. When you notice yourself drifting there, move to one of the poles.

The Integration

How do the core and edge relate?

Core enables edge. The stable core is what makes edge experimentation sustainable. Strong identity means failed experiments don’t threaten who you are. Regulatory capacity means activation doesn’t trigger collapse. Domain depth means you can evaluate whether the new tools are actually helping. Relationships mean you have support when things are hard.

Without core, the edge becomes chaotic—experiments without grounding, activity without integration, motion without direction.

Edge extends core. The adaptive edge is where the core capabilities meet new tools. Judgment trained on AI-generated outputs. Taste refined in infinite possibility space. Domain depth applied through new interfaces. The edge takes what the core has built and discovers new applications.

Without edge, the core becomes stagnant—preserved but not growing, stable but increasingly obsolete.

The rhythm between them. Neuropolar operation is oscillation, not static allocation. Periods of core deepening (focused skill development, relationship investment, identity work) alternate with periods of edge extension (aggressive experimentation, shipping, learning by doing).

The 80/20 is a rough guide. Some weeks will be 100% core; some will be split 50/50 between core and edge. The rhythm matters more than a constant ratio. What matters is: when you’re at core, you’re actually deepening core. When you’re at edge, you’re actually extending edge. And you’re never in the middle.
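One way to see how an uneven rhythm can still land near the guide is to average the weekly splits over a longer window. The sketch below assumes four invented weeks and a hypothetical helper, `average_split`; the only rule carried over from the text is that the middle stays at zero every week.

```python
def average_split(weekly_core_shares: list[float]) -> dict[str, float]:
    """Average core/edge split over several weeks (hypothetical helper).

    Each entry is the fraction of that week spent on core; the rest
    of the week is edge, and the middle is zero by construction.
    """
    if not weekly_core_shares:
        raise ValueError("need at least one week")
    for share in weekly_core_shares:
        if not 0.0 <= share <= 1.0:
            raise ValueError("each share must be between 0 and 1")
    core = sum(weekly_core_shares) / len(weekly_core_shares)
    return {"core": core, "edge": 1.0 - core, "middle": 0.0}


# Assumed month: an all-core week, a 50/50 week, and two ordinary weeks.
month = average_split([1.0, 0.5, 0.8, 0.9])
print({pole: round(share, 2) for pole, share in month.items()})
```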

Practical Deployment

What does this look like in a given week?

Core activities:

Deep work on core competencies (writing, analysis, creation at your current skill level)

Relationship maintenance (conversations, collaboration, support)

Judgment refinement (evaluating work, giving feedback, articulating quality)

Regulatory practice (the protocols from Part 8)

Learning in your domain (reading, studying, developing expertise)

Edge activities:

Daily AI tool usage for real work

Publishing work in progress (not just finished pieces)

Experimenting with unfamiliar tools

Building projects that might fail

Engaging with communities on the frontier

Middle activities (to catch and redirect):

Extended research without action

Drafts that never ship

Evaluation frameworks that delay decision

Social media consumption without contribution

Discussion about AI without using AI

When you notice middle activity, choose a pole. Either redirect to genuine core deepening, or push to actual edge extension. The middle is the default; escaping it requires intention.
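Catching middle activity is easier with a simple weekly tally. The following is a minimal sketch, assuming you label each logged activity as core, edge, or middle yourself. The `tally` function, the log format, and the sample hours are all hypothetical; the activity names are borrowed from the lists above.

```python
from collections import defaultdict

POLES = ("core", "edge", "middle")


def tally(log: list[tuple[str, str, float]]):
    """Return each pole's share of logged hours, plus the middle activities.

    `log` holds (activity, pole, hours) entries. The pole label is a
    judgment call made by the person keeping the log.
    """
    hours = defaultdict(float)
    for activity, pole, spent in log:
        if pole not in POLES:
            raise ValueError(f"unknown pole for {activity!r}: {pole!r}")
        hours[pole] += spent
    total = sum(hours.values())
    shares = {pole: (hours[pole] / total if total else 0.0) for pole in POLES}
    to_redirect = [activity for activity, pole, _ in log if pole == "middle"]
    return shares, to_redirect


# Assumed sample week (hours are invented for illustration).
week_log = [
    ("deep work on core competencies", "core", 20),
    ("relationship maintenance", "core", 6),
    ("regulatory practice", "core", 4),
    ("daily AI tool usage for real work", "edge", 5),
    ("publishing work in progress", "edge", 3),
    ("extended research without action", "middle", 2),
]

shares, to_redirect = tally(week_log)
print(shares)
print("redirect to a pole:", to_redirect)
```

Anything that appears in `to_redirect` is a prompt to choose: turn it into genuine core deepening or push it to the edge.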

The Career Barbell

Zoom out from tactics to overall positioning.

Core career assets:

Reputation in specific domain

Relationships with specific people

Body of work that demonstrates judgment

Skills that compound with AI assistance

Ability to identify what’s worth doing

Edge career bets:

Public building in new capability areas

Projects that might fail visibly

Positioning in emerging spaces

Experimental collaborations

New identity extensions

Middle career positions (avoid):

Generalist positioning (good at many things, distinguished at none)

Waiting for market clarity before committing

Hedged job searches (neither fully committed nor actively exploring)

Professional development that’s neither deep nor experimental

The neuropolar career looks strange from the outside. Core activities look almost boring—deep in a specific domain, focused on judgment and taste, invested in relationships. Edge activities look almost reckless—shipping before ready, building in public, embracing visible failure.

And the middle—the “balanced approach” that most career advice recommends—is absent. Nothing lives there.

The Stakes

The allocation isn’t just about optimization. It’s about survival in non-ergodic conditions (Part 4).

Stable core is ruin prevention. The identity, relationships, and regulatory capacity that persist if experiments fail. The floor you can’t fall through.

Adaptive edge is compounding capture. The experimentation that starts the multiplicative clock ticking. The trajectory that compounds.

Forbidden middle is slow loss. Resources spent without capturing either benefit. The appearance of prudence that is actually the highest-risk position.

You have finite resources—time, attention, energy. Every unit allocated to the middle is a unit not deepening the core or extending the edge. The opportunity cost compounds.

The barbell isn’t an aesthetic choice. It’s the allocation that survives.
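The ruin-prevention logic can be illustrated with a toy multiplicative gamble. This is a sketch under loud assumptions: the payoffs (a coin flip that multiplies the exposed portion by 1.5 or 0.6) are hypothetical, Part 4’s own math is not reproduced here, and the model does not represent the forbidden middle. It only shows why a protected core plus a small exposed edge can grow over time while full exposure to the same bet shrinks, even though the bet has a positive expected value.

```python
import math

# Hypothetical coin-flip payoffs for the exposed portion (assumptions).
UP, DOWN = 1.5, 0.6


def expected_multiplier(exposure: float) -> float:
    """Average one-round multiplier across many parallel players."""
    win = 1.0 + exposure * (UP - 1.0)
    loss = 1.0 + exposure * (DOWN - 1.0)
    return 0.5 * win + 0.5 * loss


def time_average_growth(exposure: float) -> float:
    """Long-run log growth per round for one player repeating the bet."""
    win = 1.0 + exposure * (UP - 1.0)
    loss = 1.0 + exposure * (DOWN - 1.0)
    return 0.5 * math.log(win) + 0.5 * math.log(loss)


for exposure in (0.0, 0.2, 1.0):
    print(
        f"exposure {exposure:.0%}: "
        f"expected multiplier {expected_multiplier(exposure):.3f}, "
        f"time-average growth {time_average_growth(exposure):+.4f}"
    )
```

With these assumed payoffs, full exposure has the highest expected multiplier but a negative time-average growth rate, which means a single player repeating the bet tends toward ruin. At 20% exposure the growth rate is positive: the protected 80% is the floor, and the exposed 20% is what compounds.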

This is Part 9 of Neuropolarity, a 10-part series on navigating the AI phase transition.

Previous: Part 8: Nervous System Protocols

Next: Part 10: Navigation Authority — Building credibility in chaos