Dead Internet Theory Was Right (Sort Of)

Part 22 of 25 in The Philosophy of Future Inevitability series.

Most of the internet is bots talking to bots.

This sounds like conspiracy theory. It's not. It's documented. Measurable. Getting worse.

The conspiracy theory version is wrong about the details but right about the trajectory. We're approaching the bot horizon.

The Numbers

Estimates vary. Some say 40% of internet traffic is bots. Others say 60%. Some specific platforms, higher.

Not all bots are malicious. Many are search engines, monitoring services, legitimate automation. But a growing fraction is designed to seem human. To engage. To influence.

And that fraction is about to explode.

Let's be specific. Imperva's 2023 Bad Bot Report found that 47.4% of all internet traffic in 2022 was bots. Of that, close to two-thirds was "bad bots" designed to scrape, spam, manipulate, or impersonate.

Twitter, before Elon Musk's takeover, reported that fewer than 5% of its accounts were fake. External researchers estimated 15-20%. The company's internal documents, revealed during litigation, suggested they didn't actually know. The detection methods were inadequate.

Instagram fake engagement is documented. You can buy 1,000 likes for $10. Those likes come from bot accounts that seem real—profile photos, posts, followers. Good enough to fool casual inspection.

YouTube comment sections on popular videos are often 30-50% bots. Some pushing scams. Some just farming engagement for account aging. The bots reply to each other. Whole conversations that are entirely artificial.

This is pre-LLM data. Before AI could write convincing comments. Before image generation made profile photos trivial. Before the cost of seeming human dropped to near-zero.

Now run that scenario with GPT-4 level language capability. With Midjourney quality profile images. With automation that can maintain consistent personas across months.

The fraction doesn't just increase. It explodes.

Dead Internet Theory

The theory, as originally stated: the internet "died" around 2016-2017, and most content is now AI-generated or bot-distributed. The feeling of authentic human connection online is manufactured.

The original theory was paranoid, premature, conspiratorial.

It's also becoming true.

Not in the way originally theorized—shadowy forces replacing humans with bots. But in the emergent way—everyone adopting AI tools until the aggregate effect is bot-dominated content.

The mechanism is banal. The outcome is the same.

The Generative Flood

GPT-3 was released in 2020. GPT-4 in 2023. Each generation more capable, more accessible.

Content that used to require humans became easy to generate. Blog posts. Comments. Reviews. Social media posts. Product descriptions. News articles.

Not all of it is spam. Some is legitimate use—businesses using AI for efficiency. But some is pure manipulation. And the ratio is shifting.

When generating content is nearly free, content explodes. Most of that explosion isn't human.

The economics changed fundamentally. Pre-AI, generating a blog post required a human. Cost: $50-500 depending on quality and length. This limited spam. You could only spam if the spam was profitable enough to cover costs.

Post-AI, generating a blog post costs cents in API calls. Maybe dollars if you want high quality. The cost constraint disappeared.
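The shift is easy to see with a little arithmetic. The sketch below is illustrative only: the $100 human post sits inside the $50-500 range above, while the 5-cent AI post, the $1,000 budget, and the 50 cents of ad revenue per post are assumptions picked for the example, not measured figures.

```python
# Illustrative assumptions only, in US cents. None of these are measured figures.
HUMAN_POST_CENTS = 10_000  # $100, inside the $50-500 range quoted above
AI_POST_CENTS = 5          # "cents in API calls"
AD_REVENUE_CENTS = 50      # hypothetical lifetime ad revenue per spam post


def posts_for_budget(budget_cents: int, cost_per_post_cents: int) -> int:
    """How many posts a fixed budget buys at a given unit cost."""
    return budget_cents // cost_per_post_cents


def is_profitable(cost_per_post_cents: int, revenue_per_post_cents: int) -> bool:
    """Spam only gets published if each post earns more than it costs."""
    return revenue_per_post_cents > cost_per_post_cents


budget_cents = 100_000  # $1,000
print(posts_for_budget(budget_cents, HUMAN_POST_CENTS))   # 10
print(posts_for_budget(budget_cents, AI_POST_CENTS))      # 20000
print(is_profitable(HUMAN_POST_CENTS, AD_REVENUE_CENTS))  # False
print(is_profitable(AI_POST_CENTS, AD_REVENUE_CENTS))     # True
```

Under these assumptions the same budget buys 2,000 times as many posts, and a post that earns pennies flips from a loss to a profit.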

Result: AI-generated content farms. Sites that publish hundreds of articles daily. All AI-written. All SEO-optimized. All designed to rank in search and show ads.

Google detects some of this. Downgrades obviously AI-generated spam. But the arms race is on. AI content gets better. Detection gets harder. And some AI content is legitimately useful, so you can't just filter all of it.

Amazon's Kindle store is flooded with AI-generated books. Self-help. Romance. Children's books. Some are obvious—weird phrasing, inconsistent plots. Others are good enough to sell.

The same dynamic hits every platform. Reddit comments. Medium articles. Substack posts. Anywhere text appears, AI-generated text appears.

And it's not just volume. It's personalization. AI can generate content targeted to specific demographics, specific keywords, specific conversation threads. Spam that adapts. Manipulation that learns.

The flood is only beginning.

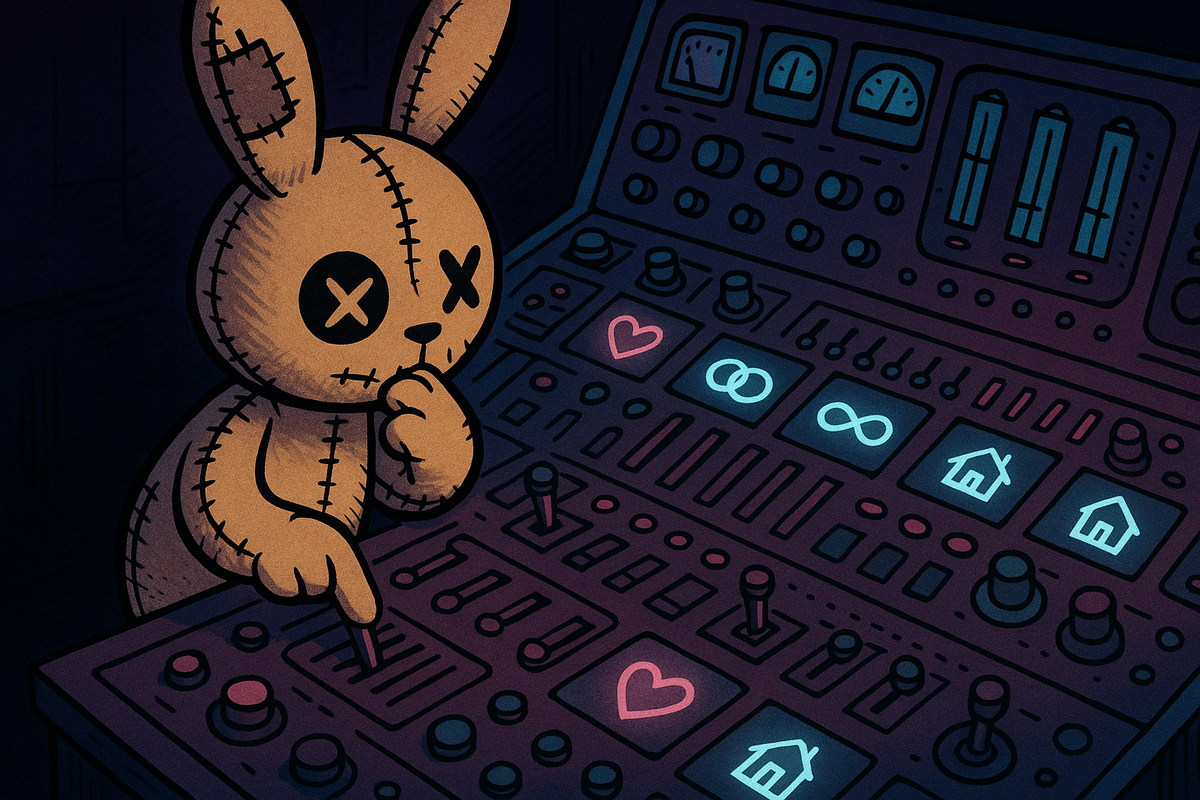

The Engagement Bots

Social media runs on engagement. Likes. Comments. Shares. These metrics determine what gets seen.

Bots can generate engagement. Have always been able to. But now they can generate convincing engagement. Comments that seem thoughtful. Discussions that seem real. Personas that seem human.

The 73% who can't tell AI from human in conversation? They can't tell bot comments from human comments either.

The feeds are filling with artificial engagement, boosting artificial content, creating an artificial sense of consensus.

The Astroturf Problem

Astroturfing—fake grassroots campaigns—has always existed.

It used to be expensive. You needed humans to post, to comment, to maintain personas. This limited scale.

Now it's cheap. One operator can run thousands of convincing personas. Can simulate grassroots movements. Can create the appearance of organic support for anything.

Political campaigns use this. Corporations use this. State actors use this.

You can't tell what's real public opinion anymore. Maybe nothing you've seen online is real public opinion.

The 2016 Russian interference campaign used humans. Troll farms. People paid to post divisive content, pretend to be Americans, amplify controversies. It was effective but limited by human labor costs.

The 2024 campaigns—and we know they existed even if specifics are classified—used AI. One operator running 10,000 personas. Each persona with generated profile photos, consistent posting histories, realistic interaction patterns. Each targeting specific demographics in specific swing districts.

Cost of the 2016 campaign: tens of millions in labor. Cost of a 2024 equivalent: hundreds of thousands in API calls and infrastructure. That is roughly a 100x cost reduction, and the same budget now buys roughly 100x the scale.

This isn't hypothetical. The tools exist. You can rent them. "Social media management platforms" that are transparently astroturfing services. They offer "engagement packages"—which means bot networks. They promise "authentic-seeming interactions"—which means AI-generated content.

Corporate reputation management uses this. A negative review appears. The service deploys 50 positive reviews. All AI-generated. All with realistic user profiles. The negative review gets buried.

Political campaigns use this. A candidate's position is unpopular. The service creates the appearance of grassroots support. Hundreds of "real people" posting in favor. Turns out none of them are real.

Product launches use this. Company wants buzz. Service generates thousands of excited social media posts. Product trends. Seems like organic excitement. Isn't.

The line between marketing and manipulation disappeared. Or rather: marketing became manipulation, at scale, with AI.

The Information Collapse

This destroys the information ecosystem.

Reviews become useless. Fake reviews are indistinguishable from real ones. Amazon, Yelp, Google—flooded with AI-generated five-star and one-star reviews, depending on who's paying.

News becomes suspect. AI can generate plausible articles. Some sites are entirely AI-generated, designed to look like news sources. They rank in search. They spread on social.

Expertise becomes noise. For any claim, AI can generate convincing counter-claims, supporting "evidence," expert-seeming opinions. Asymmetric costs—it's cheap to pollute, expensive to verify.

The cost of generating bullshit is approaching zero. The cost of detecting bullshit is not.

This is the core problem: asymmetric warfare on information.

Generating a fake review: 5 seconds with ChatGPT. Cost: fractions of a cent.

Detecting a fake review: Human review, pattern analysis, account history checking. Cost: dollars per review.

Generating a fake news article: Minutes with AI. Plausible sources, realistic quotes, convincing framing. Cost: cents.

Verifying a news article: Contact sources, check facts, investigate claims. Cost: hours of journalist time.

Generating fake scientific-sounding evidence: AI can cite real papers, misrepresent their findings, create plausible but false interpretations. Cost: trivial.

Debunking fake scientific claims: Actual expertise. Reading the papers. Understanding the methods. Cost: expert time, which is expensive and scarce.

The attacker has a cost advantage on the order of 1000x. This is unsustainable. You can't manually verify everything when pollution is free.
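To make the asymmetry concrete, here is a rough sketch. The dollar figures are assumptions chosen to match the orders of magnitude above ("fractions of a cent" to generate a review, "dollars" to check one). The budgets are hypothetical too.

```python
from fractions import Fraction

# Hypothetical unit costs in dollars, chosen only to match the rough
# orders of magnitude in the text. They are not measurements.
GENERATE_REVIEW = Fraction(1, 200)  # $0.005 per fake review
DETECT_REVIEW = Fraction(5)         # $5 of human time per review checked


def asymmetry(generate_cost: Fraction, detect_cost: Fraction) -> Fraction:
    """How many times more it costs to check an item than to fake one."""
    return detect_cost / generate_cost


def coverage(attacker_budget, defender_budget, generate_cost, detect_cost) -> Fraction:
    """Fraction of the attacker's fakes the defender can afford to check."""
    fakes = Fraction(attacker_budget) / generate_cost
    checks = Fraction(defender_budget) / detect_cost
    return min(Fraction(1), checks / fakes)


print(int(asymmetry(GENERATE_REVIEW, DETECT_REVIEW)))              # 1000
print(float(coverage(10, 1000, GENERATE_REVIEW, DETECT_REVIEW)))   # 0.1
```

With these numbers, an attacker spending $10 forces a defender spending $1,000 to leave 90% of the fakes unchecked.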

The result: trust collapses. You can't trust reviews—too many are fake. Can't trust news—too much is generated. Can't trust social media consensus—too much is astroturfed. Can't trust search results—too many are SEO-gamed AI content.

What's left? Direct relationships. Verified sources. Personal networks. The open internet becomes unusable for information. The trusted sources become walled gardens—expensive, exclusive, gatekept.

Information democracy dies. Not because of censorship. Because of pollution. The commons gets poisoned until only private sources remain trustworthy.

The Bot Horizon

A horizon is a point beyond which you can't see.

The bot horizon is the point at which you can't distinguish bot from human reliably. Can't tell which content is generated. Can't tell which interactions are authentic.

We're approaching it. Might have passed it already.

Beyond the horizon, the social internet changes fundamentally. Trust collapses. Verification becomes necessary for every interaction. The default assumption flips from "probably human" to "probably not."

What Survives

Some things survive the bot horizon:

Closed networks. Private groups. Vetted communities. Places where membership is verified.

High-bandwidth communication. Video, voice, in-person. Bots can fake these too, but it's harder. For now.

Reputation systems. Long histories. Consistent identities. Expensive to create, cheap to verify.

Proof of humanity. New mechanisms—whatever they turn out to be—that demonstrate human origin.

The open, anonymous internet we knew is dying. What replaces it is smaller, more verified, less free.

Practically, this means:

Premium verification services. Companies that verify human identity become valuable. Not just "verify you're real"—verify you're consistently the same real person over time. This becomes the new credential.

Invite-only communities. The valuable discussions move to Discord servers, Slack groups, private forums where membership is vetted. The open web becomes the spam web. The closed web becomes the real web.

Expensive proof of work. Some systems might require costly actions that bots won't pay for. Cryptocurrency-style proof of human activity. If commenting costs money—even small amounts—bots become uneconomical.

Biometric authentication. Video verification. Voice verification. Eventually, continuous authentication that proves ongoing human presence. Invasive but potentially necessary.

Reputation staking. Long-established accounts with consistent behavior become valuable. New accounts are assumed fake. This makes the internet less accessible to newcomers but more resistant to bot pollution.

Local networks. Some communities go full physical. If you can't verify someone online, meet them in person. The internet becomes a discovery layer; trust requires meatspace.

None of these are complete solutions. All have costs. All reduce the openness that made the internet valuable. But the alternative—trusting nothing online—is worse.
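The "commenting costs money" idea from the list above comes down to a break-even calculation. This is a minimal sketch, and every figure in it is a hypothetical assumption: what a bot comment earns its operator, what it costs to generate, the fee, and how often a human posts.

```python
from decimal import Decimal


def bot_profit_per_comment(revenue: Decimal, gen_cost: Decimal, fee: Decimal) -> Decimal:
    """Operator's margin on one bot comment once a posting fee is charged."""
    return revenue - gen_cost - fee


def breakeven_fee(revenue: Decimal, gen_cost: Decimal) -> Decimal:
    """Smallest fee at which a bot comment stops making money."""
    return max(Decimal("0"), revenue - gen_cost)


# Hypothetical figures in dollars, for illustration only.
revenue = Decimal("0.002")    # expected value of one bot comment to its operator
gen_cost = Decimal("0.0005")  # API cost of generating it
fee = Decimal("0.01")         # one cent per comment

print(breakeven_fee(revenue, gen_cost))                # 0.0015
print(bot_profit_per_comment(revenue, gen_cost, fee))  # -0.0085
print(100 * fee)                                       # 1.00 per month for a human posting 100 comments
```

The design bet is that a fee too small for a person to notice still exceeds the thin margin on a single bot comment. It stops working against any operator whose comments are worth more than the fee.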

The architecture changes. The old model: open, anonymous, free. The new model: closed, verified, costly. We lose something important. But maybe we already lost it.

The Conspiracy Gets Real

Here's the thing about dead internet theory:

It was wrong as a conspiracy. There was no moment of death. No shadowy group killing the authentic internet.

It's becoming right as an observation. The authentic human internet is dying. Being diluted by generated content until it's a minority of what exists.

Not through conspiracy. Through incentives. Through tools. Through the economics of attention.

The conspiracy theorists were crazy. They were also correct about where we'd end up.

Living Post-Horizon

Assume the worst. Most of what you see online is not what it seems.

Build verification habits. Cross-reference. Check sources. Be skeptical of consensus that forms too quickly.

Prioritize high-trust relationships. The connections you've verified. The communities you've vetted.

Accept the loss. The open internet of the 2000s and 2010s is ending. What we get next is different. Maybe better in some ways. Certainly worse in others.

The bots won. They were always going to.

The question is what we do now.

Practical survival strategies:

Default to skepticism. The prior probability that any given account, comment, review, or article is bot-generated is high and rising. Start from distrust. Require evidence of humanity.

Value in-person connections. The people you've met in physical space are provably real. Digital-only relationships are increasingly suspect. This is regressive but necessary.

Build trust networks. Small groups of verified humans who vouch for each other. Renaissance of the "web of trust" concept. Your network becomes your filter.

Ignore metrics. Follower counts are fake. Like counts are fake. View counts are fake. Engagement metrics are thoroughly gamed. Judge content by content, not by apparent popularity.

Pay for quality. Free content is increasingly bot-generated. Paywalls and subscriptions become quality signals. Not perfect—but better than the open web.

Diversify information sources. Don't trust any single platform. Cross-reference. If something seems real, verify it multiple ways. The days of taking anything at face value are over.

Teach media literacy. The next generation needs to grow up assuming deception. Not paranoid—realistic. The internet is full of artificial agents pursuing various agendas. This is just the environment.

Accept imperfection. You will be fooled. Some bots will seem human. Some astroturf will seem organic. The goal isn't perfect detection. The goal is reducing susceptibility.
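The "web of trust" idea in the list above can be sketched as a graph search: you trust the people you have verified, and, more weakly, the people they vouch for, out to a fixed number of hops. The names, the vouching graph, and the two-hop limit below are all made up for illustration. Real systems add weights, revocation, and much more.

```python
from collections import deque


def trusted_within(vouches: dict, me: str, max_hops: int) -> dict:
    """Breadth-first search over who-vouches-for-whom.

    Returns each reachable person mapped to their hop distance from `me`.
    """
    distance = {me: 0}
    queue = deque([me])
    while queue:
        person = queue.popleft()
        if distance[person] == max_hops:
            continue  # do not extend trust past the hop limit
        for other in vouches.get(person, ()):
            if other not in distance:
                distance[other] = distance[person] + 1
                queue.append(other)
    del distance[me]
    return distance


# Hypothetical vouching graph: key vouches for each name in the list.
vouches = {
    "me": ["alice", "bob"],
    "alice": ["carol"],
    "carol": ["dave"],
    "mallory": ["me"],  # vouching for me earns mallory nothing
}

print(trusted_within(vouches, "me", 2))  # {'alice': 1, 'bob': 1, 'carol': 2}
```

Trust flows only outward from you. An unknown account cannot enter your filter by vouching for itself or for you, and distant strangers fall off the edge of the hop limit.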

This is exhausting. It's work. It makes the internet less fun, less free, less useful. But it's adapting to reality. The dead internet theory isn't theory anymore. It's observation.

We're living in it.
