AI Slop Is People Slop in Technicolor

Part 13 of 25 in The Philosophy of Future Inevitability series.

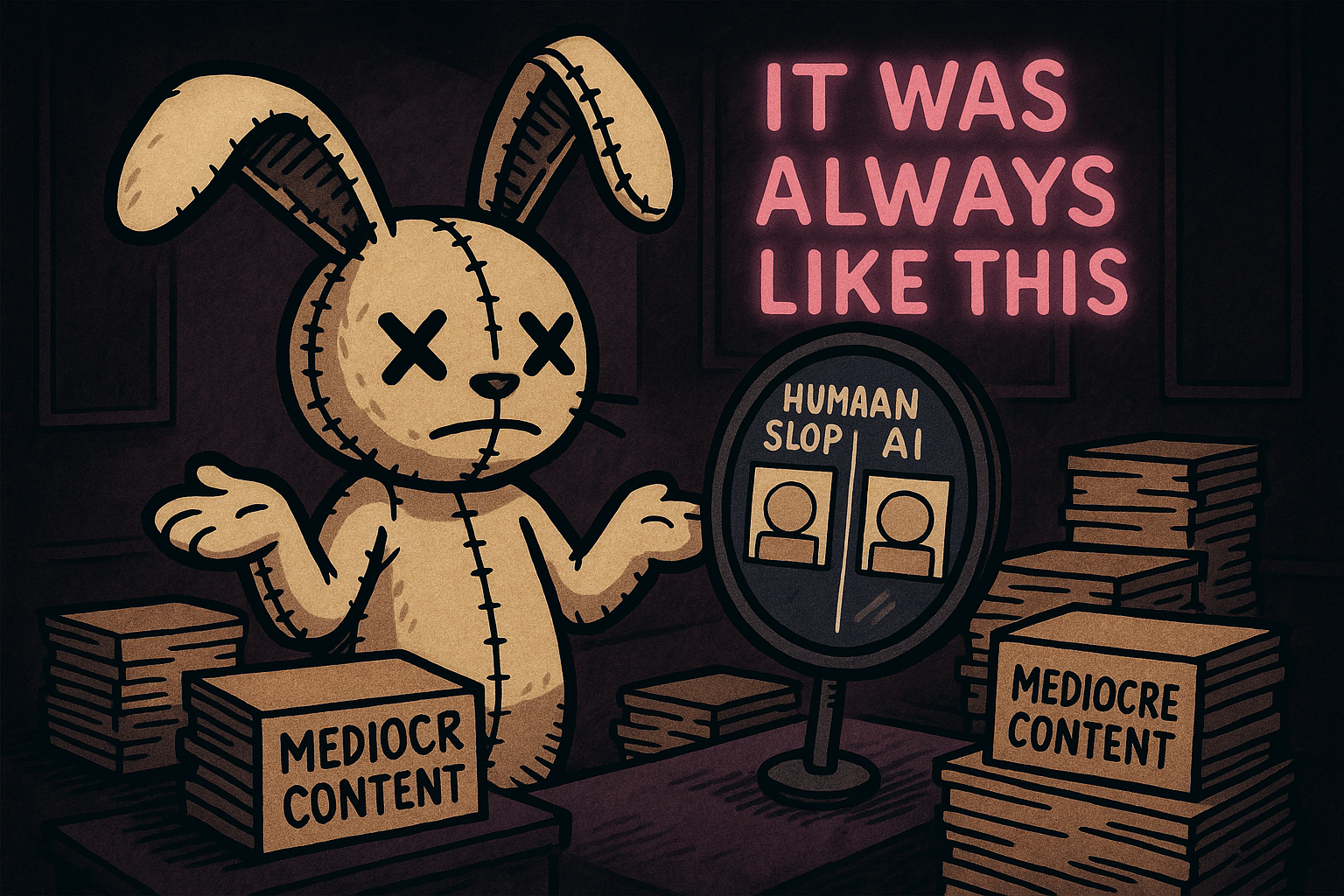

Everyone's worried about AI slop.

The generic blog posts. The repetitive LinkedIn insights. The content that says nothing while appearing to say something. The infinite mediocrity flooding every platform.

Here's the thing: this isn't new.

AI slop is just people slop, scaled up and made visible. The mediocrity was always there. Now it's generated faster and in higher volume.

The Pre-AI Baseline

Before AI, most content was mediocre.

Most blog posts said nothing new. Most LinkedIn posts were generic platitudes. Most business writing was padding around minimal insight.

We didn't notice because the volume was lower. Because we read effort into what was actually just output. Because we assumed a human wrote it, so it must contain human thought.

It usually didn't.

The median was always mediocre. AI just revealed what median means.

Go back and read the internet circa 2015. Before GPT. Before mass AI-generated content. The quality was already bad.

Most blog posts read like content-farm output, written for search engines first. "10 Tips for Better Productivity." "How to Lose Weight Fast." "The Secret to Success." Generic advice, recycled endlessly, adding no new information to the world. Written by humans, but barely.

Most LinkedIn posts were engagement bait. "Agree?" posts. Humble brags. Platitudes about leadership and growth mindset. Written to generate likes, not to communicate ideas.

Most marketing copy was templates filled in with specifics. Change the product name and industry, keep the structure. "Are you tired of [problem]? [Product] is the solution. Our innovative approach to [thing] will transform your [outcome]."

This was the baseline. Human-generated, but empty. The effort was there—someone typed the words. The thought was not.

We tolerated this because we assumed the effort implied something. If a human spent time writing it, there must be value there somewhere. We gave the benefit of the doubt.

AI removes the effort. The same generic content gets produced in seconds. And suddenly we see it for what it always was: empty.

The content didn't get worse. The excuse got removed. We can't pretend effort equals value anymore when there's no effort.

The LinkedIn NPC

You've seen this person.

"Hard work pays off. Here's what I learned from failure. Be authentic. Surround yourself with good people. Success is a journey, not a destination."

This is NPC dialogue. Non-Player Character. The script that loops infinitely, meaning nothing, adding nothing.

This existed before GPT. This was always the median.

The person posting this wasn't thinking. They were performing "thought leader content" without having thoughts. They were producing content-shaped objects.

AI can produce this instantly because there's nothing to it. The pattern is trivial. Generic insight template plus generic topic equals LinkedIn post.

AI slop and people slop are indistinguishable because they're the same thing.

The Visibility Problem

AI made the human condition visible.

Before: you see generic content and assume a human produced it through effort. The effort implies something. The humanity implies meaning.

After: you see generic content and know a machine could have produced it in seconds. The ease reveals the emptiness. The machine capability exposes the human lack.

Nothing changed about the content. What changed is you can't unsee how empty it was.

The emperor was always naked. AI is the child pointing.

The Taste Distribution

Here's the uncomfortable truth:

Most people have median taste. They don't notice when content is generic because they don't have a strong sense of what good content would be.

This isn't an insult. Taste is developed through exposure and cultivation. Most people haven't had the exposure. Most people haven't cultivated it.

The people who notice slop have developed taste. They see the emptiness. They recognize the patterns. They feel the lack.

But they're the minority. The median person doesn't notice median quality. The slop looks fine to them.

Taste is a skill. It requires training your discrimination. Reading enough good writing that you recognize bad writing. Seeing enough quality that you notice the absence of quality.

Most people haven't done this training. Not because they're deficient—because it's not required for most of life. You can be successful without developed taste. You can navigate most domains fine with median taste.

But this means most people can't distinguish slop from substance. The AI-generated LinkedIn post looks the same as the human-generated LinkedIn post because both are empty in the same way. The pattern-matched insight sounds like the real insight because the real insight was already pretty pattern-matched.

The person with developed taste sees the difference between slop and substance instantly. The sentence rhythms are off. The examples are too generic. The transitions are too smooth. The claims are too hedged. The whole thing feels like a template filled in, because it is.

The person without developed taste doesn't see this. They read the post, it makes sense, it's formatted well, they scroll on. They can't tell it was AI-generated because they can't tell it's slop, and they can't tell it's slop because they've been consuming slop all along.

This creates a weird bifurcation. The people with taste are increasingly alienated by the platforms. Everything feels fake, generated, empty. The signal-to-noise ratio is collapsing.

The people without taste are fine. The content looks the same as it always did. They're not experiencing a degradation because they weren't perceiving the quality that's being lost.

This isn't symmetrical. The people with taste can't go back to being satisfied with slop. Once you see it, you can't unsee it. The people without taste could develop it, but they'd have to invest significant effort, and they don't know they're missing anything.

The Acceleration

AI accelerates slop production. What took a human hours takes AI seconds.

The platforms that run on content volume get flooded. SEO gets gamed faster. Social media fills with AI-generated posts that are indistinguishable from human-generated posts—because both are empty.

The volume increases. The median quality stays the same—it was already at the floor. The proportion of quality content decreases because the denominator exploded.

Finding the good stuff becomes harder. Signal drowns in noise. The platforms become less useful.

The Mimicry Problem

AI is very good at mimicking the patterns of thought without containing thought.

"Let's unpack this." "The key insight here is..." "What most people don't realize..."

These phrases pattern-match to intelligent discussion. They signal that thinking is happening. They're the costume of insight.

The costume was always available to humans too. Many humans wore it. The thought leader who talks a lot without saying anything. The consultant who produces impressive presentations with no actionable content. The writer who sounds smart but isn't.

AI learned from this. AI is a mirror. It reflects what's in the training data. The training data was full of costume-wearing.

Here's the mechanism: certain phrases signal "this is intelligent analysis" without requiring actual intelligence. They're social grooming behaviors translated to text. They create the impression of depth.

Human bullshitters have always used these. The business consultant who fills slides with buzzwords. The academic who uses jargon to obscure the absence of ideas. The motivational speaker who strings together platitudes.

These people were successful because the costume worked. Most audiences couldn't distinguish costume from substance. The performance of intelligence was good enough.

AI perfected the performance. It can generate the costume flawlessly. The right phrases in the right order. The appropriate hedging. The gestures toward nuance. The surface-level synthesis of opposing views.

The result reads as intelligent. It has the markers. It follows the patterns. But there's no comprehension behind it. No actual thinking. Just extremely sophisticated pattern matching.

This was always true of some human output. The median business book is template-driven. Change the industry and examples, keep the structure. "Innovate or die. Here are five companies that innovated. Here are five that didn't. The difference: culture. Here's how to build innovation culture." Repeat for 250 pages.

That's a human-written template. AI can execute it perfectly. The AI version is indistinguishable from the human version because both are empty in the same way.

The uncomfortable implication: a lot of human intellectual work was already template execution. We didn't notice because we assumed the effort implied thought. AI reveals that the effort was often mechanical. The thinking was already mostly absent.

This doesn't mean all human thought is mechanical. Genuine insight exists. Genuine synthesis exists. Genuine novel thinking exists. But it was always rarer than we pretended. The median was always costume-wearing.

AI just made the costume cheaper to produce. And in doing so, revealed how much of human output was already costume.

The Irony

The people complaining loudest about AI slop are often producers of people slop.

The LinkedIn influencer upset that AI can produce their content in seconds. The business writer alarmed that their articles are indistinguishable from generated text. The thought leader threatened by the thought-leader-simulation.

If a machine can do your job in seconds, maybe your job wasn't adding what you thought it was adding.

This is harsh. It's also true.

The people producing genuinely novel, genuinely thoughtful work aren't threatened by AI slop. Their work is distinguishable. The people threatened are the people whose work was slop all along.

Watch who's panicking. It's not the researchers producing original findings. It's not the writers with a distinctive voice and genuine insight. It's not the people doing work that requires deep understanding of specific domains.

It's the people whose job was always template execution. The content marketer who wrote generic blog posts. The business analyst who filled decks with obvious observations. The thought leader who recycled the same frameworks with new branding.

These people are panicking because AI can do their job—not because AI got smart, but because their job was never that hard. It just looked hard because it took time. The time created the illusion of value.

AI removed the time. And with the time gone, the lack of value became obvious.

This is uncomfortable to recognize if you're one of these people. Your identity was built on being good at something. Your income depended on that something. Your status came from expertise in that domain.

Now you learn the domain was template execution. You weren't adding novel value. You were pattern matching in a way that AI can replicate. Your expertise was superficial.

This isn't universal. Plenty of people do real work that AI can't replicate. But the median content producer, the median business writer, the median thought leader—they were always doing slop work. They just didn't know it because everyone else was also doing slop work and calling it expertise.

The harsh reality: if your reaction to AI is "it's producing slop," look at your own output. Is it distinguishable? Is it genuinely novel? Does it require insight that goes beyond pattern matching?

If not, you weren't producing quality. You were producing slop. AI just made yours obsolete.

What This Means

Lower your estimation of pre-AI content. It wasn't as thoughtful as you assumed. The mediocrity was already there; you just couldn't see it.

Develop taste. The only defense against slop is being able to recognize it. This requires exposure to quality and deliberate cultivation.

Expect the platforms to degrade. The volume of slop will increase. The signal-to-noise ratio will worsen. The discovery problem will intensify.

Produce quality if you want to matter. The only content that stands out from infinite slop is genuinely good content. Mediocrity is now infinite. Excellence remains scarce.

The Reveal

AI slop is people slop in technicolor.

Brighter. Faster. Higher volume. But the same emptiness.

The machine held up a mirror. We didn't like what we saw. But the reflection was accurate.

Most human output was always slop. AI just made it undeniable.

Now we see.
