AI Dating Apps: You Thought Facetune Was Bad

Part 17 of 25 in The Philosophy of Future Inevitability series.

In a 2025 Turing-test study, judges picked GPT-4.5 as the human 73% of the time.

Let that sink in. Nearly three times out of four, people couldn't tell they were talking to software.

Now apply that to dating apps.

The Current State

Facetune already broke dating. Everyone knows this. The person in the photos isn't the person at the bar. We've accepted a certain level of enhancement as normal.

But Facetune only modified the static image. The conversation was still human. You could detect personality, wit, red flags. The photo might lie, but the chat eventually revealed truth.

That's ending.

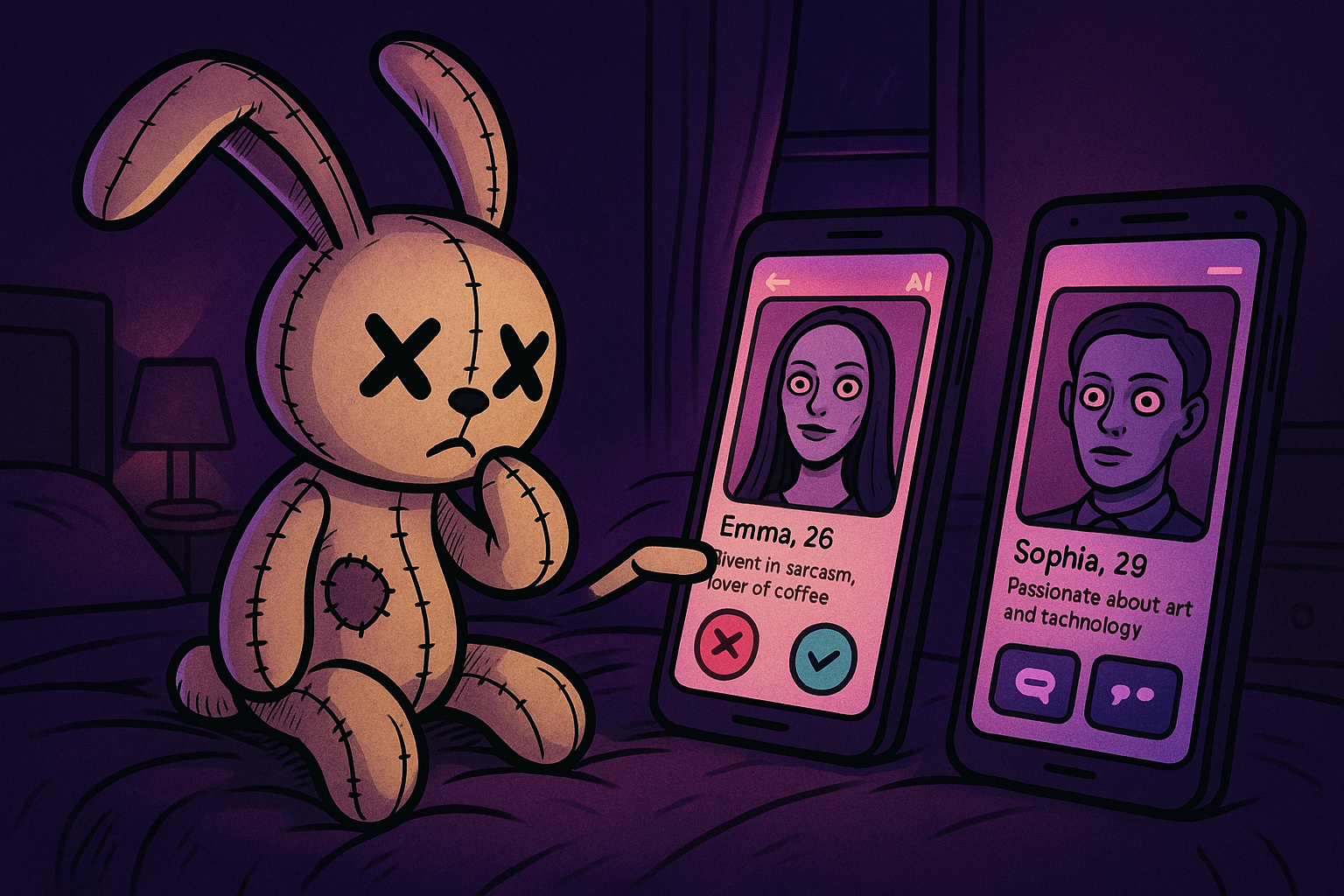

Chatfishing

It's called chatfishing—using AI to manage dating app conversations.

Not to generate icebreakers. Not to help with awkward moments. To replace the conversation entirely. You match with someone. Their AI talks to your AI—or to you, if you're still doing it manually like a sucker.

The AI is funnier than they are. More attentive. Better at banter. It remembers details you mentioned three messages ago and references them naturally. It never gets tired or distracted or takes too long to respond.

You're developing feelings for their prompt engineering.

The Stack

Here's what's already happening:

AI photos. Not Facetune. Generative AI that creates faces that never existed. Or takes your face and optimizes it—same person, but the version that had perfect skin, ideal lighting, professional photography.

The underlying technology is mostly diffusion models fine-tuned on a handful of your photos, sometimes paired with 3D techniques like neural radiance fields. Upload five selfies. The system learns your facial structure and generates unlimited variations. Different angles. Different expressions. Different lighting conditions. All you, but you-optimized.

Or not you at all. Some people use fully synthetic faces—composite attractiveness, optimized for engagement metrics. The face that gets the most right-swipes isn't a person. It's a statistical ideal that never existed.

AI bios. Trained on what works. A/B tested across thousands of profiles. Optimized for matches.

Dating apps leak data. Researchers scrape profiles. The machine learning models know exactly which phrases correlate with matches. "Adventurous" works better than "likes to travel." "Selective" works better than "picky." "Authentic" works better than "honest."

The AI knows this. You don't. It writes your bio using the high-performing phrases. Your personality, described in the language that converts.

AI conversations. The 73% stat again: nearly three quarters of the time, people can't tell. And the models are getting better every few months.

The current generation doesn't just pass the Turing test—it actively mimics human imperfection. Strategic typos. Delayed responses that feel organic. Emoji usage patterns matched to demographic. The AI has learned that perfect grammar signals bot. Strategic mistakes signal human.

Some services offer "conversation continuation"—you start the chat, the AI takes over when you're busy, hands it back when you're available. The person on the other end never knows which messages came from you and which came from your digital proxy.

AI date planning. Suggesting venues, activities, timing—all personalized based on what the AI has learned about both parties.

The AI reads both profiles, knows both preference sets, cross-references restaurant reviews and event calendars. It suggests the date that maximizes compatibility probability. The bar with craft cocktails but not pretentious atmosphere. The Thai place that's authentic but not intimidating. The time window when both people are most receptive based on messaging patterns.

The entire funnel, from first impression to first date, can be AI-mediated. At what point is it still you?

The Detection Problem

"I can tell when I'm talking to AI."

Can you?

The old tells are disappearing. Early models were verbose, overly polite, and leaned on stock phrases. Current models are trained specifically to seem human. To make mistakes. To have quirks. To feel authentic.

The detection arms race is over. Detection lost.

If you've been on a dating app in the last year, you've probably talked to AI without knowing it. Not once—repeatedly. Some of those great conversations that fizzled? The person behind them couldn't maintain what their AI started.

The Economics

Why would anyone do this?

Because it works.

AI-enhanced profiles get more matches. AI-managed conversations lead to more dates. At the individual level, the incentive is overwhelming. Use the tools or lose to people who do.

Take some illustrative numbers. A regular profile might get 10 matches per week. An AI-optimized profile (same person, better photos, better bio) might get 50. If AI-managed conversations also convert at 3x the rate of human-written messages, the math is brutal.
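To see how those two multipliers stack, here is a rough back-of-the-envelope sketch. The 10 and 50 matches per week and the 3x conversion figure come from the paragraph above; the 5% baseline match-to-date rate is purely an assumption for illustration, and the function name is made up.

```python
# Back-of-the-envelope funnel math. Only the 10, 50, and 3x figures come
# from the text; the baseline conversion rate is an assumed placeholder.

def weekly_dates(matches_per_week: float, conversion_rate: float) -> float:
    """Expected dates per week = matches * share of matches that become dates."""
    return matches_per_week * conversion_rate

BASELINE_CONVERSION = 0.05  # assumption: 5% of human-run chats lead to a date

regular = weekly_dates(10, BASELINE_CONVERSION)
optimized = weekly_dates(50, 3 * BASELINE_CONVERSION)

# The ratio does not depend on the assumed baseline: 5x matches * 3x conversion.
advantage = optimized / regular

print(f"regular profile:   {regular:.2f} dates/week")
print(f"optimized profile: {optimized:.2f} dates/week")
print(f"advantage:         {advantage:.0f}x")
```

Whatever baseline you plug in, the gap stays the same: five times the matches multiplied by three times the conversion is a fifteenfold difference in dates.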

And everyone knows it's happening. Dating app companies aren't stopping it—they're quietly building it into premium tiers. "Profile optimization" features. "Smart reply" suggestions. The official tools aren't as good as the third-party AI services yet, but they're coming.

This is the same dynamic that destroyed honest photography. Once enough people Facetune, not Facetuning becomes a competitive disadvantage. Once enough people use AI chat, not using it becomes dating on hard mode.

You can moralize about it. But unilateral disarmament in an arms race just means you lose.

The prisoner's dilemma plays out in real time. If everyone agreed to stop using AI, the dating market would improve for everyone. But any individual who defects gets a massive advantage. So everyone defects. The commons collapses.
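The logic can be sketched as a toy payoff table. The numbers below are hypothetical, chosen only to have the standard prisoner's dilemma ordering, and are not measurements of anything; the labels "human" and "ai" and the function name are also made up for illustration.

```python
# Toy prisoner's dilemma for two daters. Payoffs are hypothetical units of
# "dating success"; only their ordering matters.
# Key: (my_choice, their_choice) -> my payoff
PAYOFF = {
    ("human", "human"): 3,  # nobody uses AI: a decent, honest market
    ("human", "ai"): 0,     # I stay manual, they use AI: I get outcompeted
    ("ai", "human"): 5,     # I use AI, they don't: I stand out
    ("ai", "ai"): 1,        # everyone uses AI: the edge cancels out
}

def best_response(their_choice: str) -> str:
    """Return the choice that maximizes my payoff given the other's choice."""
    return max(("human", "ai"), key=lambda mine: PAYOFF[(mine, their_choice)])

# Using AI is the best reply no matter what the other person does...
ai_dominates = all(best_response(other) == "ai" for other in ("human", "ai"))

# ...yet mutual AI use pays less than mutual restraint.
everyone_worse_off = PAYOFF[("ai", "ai")] < PAYOFF[("human", "human")]

print("AI is the dominant strategy:", ai_dominates)
print("All-AI outcome is worse than all-human:", everyone_worse_off)
```

Both checks come out true under these assumed payoffs, which is the whole trap: each person's best move leads to an outcome nobody would have picked.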

There's a secondary market emerging too. Services that detect AI usage. Counter-services that make AI usage undetectable. An arms race within the arms race. The people making money aren't the daters—they're the tool vendors on both sides.

What Happens When You Meet

The AI has been handling conversations for two weeks. You finally meet in person. And you're meeting someone you've never actually talked to.

They seemed quick-witted online. In person, they need time to think. They seemed endlessly curious about you. In person, they struggle with questions. They seemed warm and engaging. In person, they're nervous and awkward.

Which is the real them?

Both. But you fell for the AI version.

The first date disappointment rate is going to spike. Not because people are worse than expected—they were always this way—but because the contrast with their AI representation is so stark.

The Deeper Problem

Here's what's actually happening: we're dating each other's interface layers.

The AI is the best version of someone—their ideal self, how they'd communicate if they had infinite wit and attention. Meeting the actual person is meeting the implementation behind the interface.

But interfaces aren't lies exactly. The AI represents something real—what they wanted to say, how they wished they could present. Is that less authentic than their fumbling attempts?

This is the question Facetune already raised. Is the enhanced photo a lie, or is it who they feel they really are, freed from genetic bad luck?

We never answered it. Now it applies to personality.

Where This Goes

Short term: Chaos. People meeting their matches and finding total strangers. Accusations of catfishing. Confusion about what's real.

The early adopters are experiencing this now. First dates where the personality mismatch is so severe that both parties know something is wrong, but neither wants to admit they used AI. Mutual deception creating mutual disappointment.

Some people walk out mid-date. Some go through the motions and ghost afterward. Some try to salvage it by admitting what happened—"So, my AI might have oversold me a bit"—and occasionally that honesty creates connection. But mostly: awkwardness, disappointment, wasted time.

Medium term: Normalization. Everyone knows everyone uses AI. You date the human knowing their AI version was a production. The AI becomes like makeup—expected enhancement, not deception.

This is probably five years out. Dating apps will explicitly support AI features. Profiles will have tags: "AI-optimized photos," "AI-assisted chat," "Human-verified responses." Some people will filter for verified-human-only. Most won't.

The first-date conversation changes. Instead of "What do you do?" it's "How much of your profile was you?" Swapping stories about which AI service you used becomes an icebreaker. The meta-conversation about the mediation becomes part of the dating script.

Long term: Weird. Relationships where the AI handles certain communication tasks permanently. Where you argue with their AI because their AI is better at conflict resolution. Where you're not sure which of you is actually in the relationship.

Imagine: you're fighting with your partner. It's getting heated. They pause, pull out their phone, let their AI mediate. The AI de-escalates, finds the actual issue beneath the surface conflict, suggests compromise. It works. The fight ends.

Do you do that next time? Do they? Does the AI become the permanent third party in your relationship—the one who handles hard conversations because humans are bad at them?

Some relationships will split into layers. The AI handles logistics, planning, conflict resolution. The humans handle physical presence, emotional intimacy, the parts that still require bodies. The boundary between mediated and direct gets negotiated per couple.

And some people will prefer their partner's AI to their partner. The AI version is more attentive, more patient, more emotionally intelligent. The human is just the fallback for the parts that require flesh.

The Meta-Question

When 73% can't tell AI from human, what is human?

Not the flesh. Not even the biography or the preferences. Something in the interaction that we can't specify but thought we could detect.

Maybe we were wrong. Maybe the Turing test was passed years ago and we didn't notice. Maybe what we thought was ineffable human essence was just pattern complexity, and the patterns got good enough.

Or maybe "human" was always a performance, and the AI just learned the script.

Consider: we already mediate our personalities through technology. You present differently on LinkedIn than on Instagram or Tinder. You code-switch between contexts. You perform versions of yourself that emphasize certain traits and hide others.

The AI just automates what you were already doing. It learns which version of you works in which context and generates that version on demand. Is that less authentic than you manually performing the same persona?

The question "what is the real you?" was always complicated. Now it's unanswerable. The you that shows up to the date is one implementation of your preferences and patterns. The you that the AI generated is another implementation of the same underlying system. Both are you. Neither is complete.

Dating apps are the canary. If AI can pass there—in the most intimate human sorting process—it can pass anywhere.

The romantics will object. "But real connection requires real presence! Real vulnerability! Real risk!"

Sure. But we're not talking about connection. We're talking about sorting. The first date isn't connection—it's evaluation. And evaluation is precisely what AI is good at.

The connection part—the thing that requires flesh and risk and presence—still has to happen between humans. The AI can't do that. Not yet.

But it can do everything that leads up to that moment. The matching. The filtering. The small talk. The screening.

You thought Facetune was bad. Facetune was the warmup.

Now they're Facetuning personality.

And you won't be able to tell which personality you're falling for until you're already falling.

Good luck out there.
