Delve: How AI Broke the English Language

Part 19 of 25 in The Philosophy of Future Inevitability series.

"Let's delve into this topic."

Nobody talks like that. Nobody has ever talked like that. Before 2023, the word "delve" barely registered in everyday human writing.

Now it's everywhere. Because AI loves to delve.

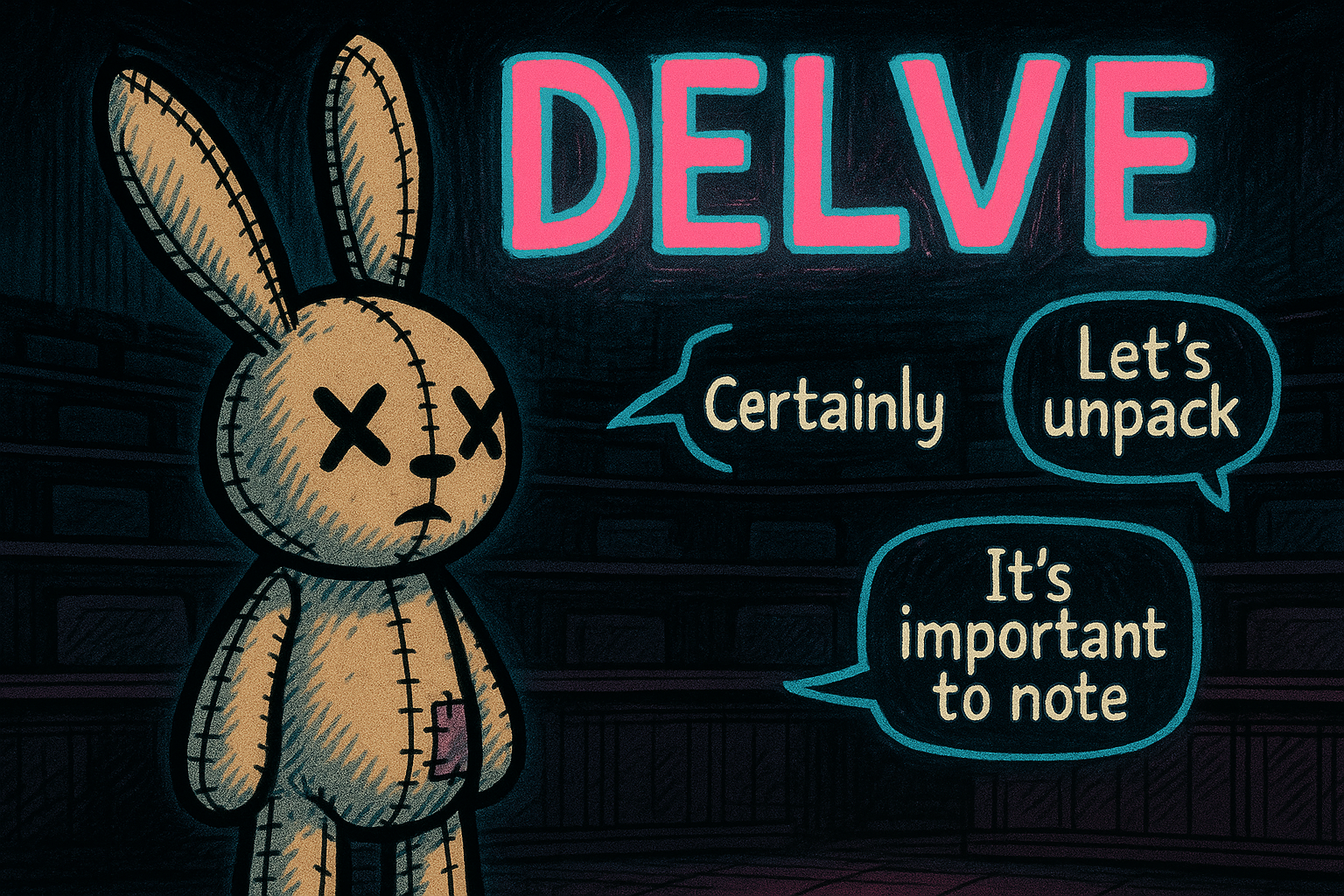

The Fingerprints

AI has stylistic fingerprints. Words and phrases that appear with suspicious frequency in AI-generated text:

Delve. The canonical tell. If someone delves, they're either a hobbit or an AI.

Certainly. "Certainly, I can help with that." Formal, agreeable, slightly old-fashioned. Very AI.

It's important to note. AI loves noting importance. Humans usually just... say the thing.

In conclusion. AI wraps things up neatly. Human writing is messier.

I cannot and will not. The refusal pattern. When you hit a guardrail.

Explore this further. Adjacent to delve. Constantly exploring.

These aren't errors. They're stylistic choices—choices that emerged from training on a particular corpus and optimizing for certain feedback.

They're how you can tell.

The emergence of these tells is interesting. Nobody programmed the AI to say "delve." It's not in the system prompt. It's not a hardcoded template. The word emerged through training dynamics.

Here's what probably happened: the training corpus included formal writing—academic papers, professional documents, older literature. These sources use "delve" more than casual modern English does. The AI learned the pattern.

Then RLHF optimization likely made it worse. When the AI used "delve," human raters didn't penalize it, because the word pattern-matched to "sophisticated" writing. So the usage was reinforced. If outputs that delved got even slightly higher ratings than outputs that didn't, then over millions of training iterations "delve" would become overrepresented.

The same process created all the tells. "Certainly" is polite, and RLHF rewards politeness. "It's important to note" is a hedging phrase that makes claims sound more careful, and RLHF rewards careful claims. "In conclusion" provides explicit structure, and RLHF rewards clarity.

Each pattern made sense in isolation. But at scale, they created a distinctive voice. The GPT voice. The Claude voice. Identifiable across billions of words of output.

For a while, these tells were reliable. You could scan text for "delve" and flag AI content with reasonable accuracy. Then the labs noticed. They tuned models to avoid the most obvious tells. "Delve" frequency dropped.
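That kind of scan fits in a few lines of Python. This is a toy, not a real detector: the phrase list is just the tells named in this post, and the function name `tell_rate` and the per-1,000-words scoring are assumptions made for the example.

```python
import re

# Tell phrases taken from the list earlier in this post.
# Illustrative only; nobody has validated this list as a detector.
TELLS = [
    "delve",
    "certainly",
    "it's important to note",
    "in conclusion",
    "i cannot and will not",
    "explore this further",
]


def tell_rate(text: str) -> float:
    """Return tell-phrase hits per 1,000 words (a hypothetical metric)."""
    # Lowercase and normalize curly apostrophes so "It's" matches "it's".
    lowered = text.lower().replace("\u2019", "'")
    words = re.findall(r"[a-z']+", lowered)
    if not words:
        return 0.0
    hits = 0
    for phrase in TELLS:
        # Exact phrase matches only; inflections such as "delving" are not counted.
        pattern = r"\b" + re.escape(phrase) + r"\b"
        hits += len(re.findall(pattern, lowered))
    return 1000 * hits / len(words)
```

A high score says only that the text leans on these phrases. Where to draw the line between "suspicious" and "fine" is a separate question this sketch doesn't answer.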

But new tells emerged. The underlying statistical signature doesn't go away—it just shifts. The tells become subtler. Maybe it's paragraph length distribution now. Maybe it's the ratio of active to passive voice. Maybe it's the frequency of transition phrases.
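Some of those candidate signals are easy to measure, even if it's unclear which ones actually separate human from machine. A rough sketch, assuming paragraphs are separated by blank lines; the transition list and the name `style_features` are made up for illustration, and active versus passive voice is left out because it needs a real parser.

```python
import re
from statistics import mean, pstdev

# A hypothetical list of transition phrases; swap in whatever you want to track.
TRANSITIONS = ["however", "moreover", "furthermore", "additionally", "therefore"]


def style_features(text: str) -> dict:
    """Compute a few surface statistics of the kind a subtler detector might use."""
    # Paragraphs are assumed to be separated by one or more blank lines.
    paragraphs = [p for p in re.split(r"\n\s*\n", text) if p.strip()]
    lengths = [len(p.split()) for p in paragraphs]
    total_words = sum(lengths)
    lowered = text.lower()
    transition_hits = sum(
        len(re.findall(r"\b" + re.escape(t) + r"\b", lowered)) for t in TRANSITIONS
    )
    return {
        "mean_paragraph_words": mean(lengths) if lengths else 0.0,
        # Spread of paragraph lengths: very even paragraphs give a low value.
        "paragraph_words_stdev": pstdev(lengths) if lengths else 0.0,
        "transitions_per_1000_words": (
            1000 * transition_hits / total_words if total_words else 0.0
        ),
    }
```

None of these numbers means anything on its own. They only become a signal when compared across a large pile of known-human and known-AI text.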

The detector learns the new patterns. The generators learn to evade. The arms race continues.

The Detection Arms Race

For a moment, scanning for words like "delve" worked. Then the models were told to avoid those words and to vary their phrasing, and they did. The obvious tells faded and subtler ones took their place: the statistical signature shifted but didn't disappear.

This is an arms race. Detection improves. Evasion improves. Detection improves again. No stable equilibrium.

The Homogenization

Here's what's actually happening: English is being compressed.

Millions of people are using AI to write. Business communications. Academic papers. Marketing copy. Blog posts. All flowing through the same few models.

Those models have preferences. They tend toward certain phrasings, certain structures, certain levels of hedging and formality. They smooth out the edges of individual voice.

The output is readable. Often clear. But increasingly... same.

Everyone sounds like a slightly formal, slightly agreeable, slightly verbose assistant. Because that's what they're using.

The Voice Death

Before AI, writing had fingerprints.

You could identify authors by their patterns. Sentence length. Vocabulary. Rhythm. The way they started paragraphs or ended arguments.
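To make "patterns" concrete, here is a crude two-number fingerprint: average sentence length and vocabulary variety. Real stylometry uses far richer features. The function name `fingerprint` and the choice of these two measures are assumptions for the sake of the example.

```python
import re
from typing import Tuple


def fingerprint(text: str) -> Tuple[float, float]:
    """Return (mean words per sentence, distinct words / total words)."""
    # Naive sentence split on ., !, and ? (abbreviations will confuse it).
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[a-z']+", text.lower())
    if not sentences or not words:
        return (0.0, 0.0)
    mean_sentence_len = len(words) / len(sentences)
    type_token_ratio = len(set(words)) / len(words)
    return (mean_sentence_len, type_token_ratio)


# Two made-up snippets: one staccato, one run-on.
terse = fingerprint("He ran. He fell. He got up.")
winding = fingerprint("He ran and he fell and then he got up again.")
```

The staccato snippet comes out with much shorter sentences than the run-on one. Two numbers won't pick an author out of a crowd, but the idea scales: more features, more text, tighter fingerprints.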

These patterns emerged from individual brains. From specific educations and influences. From personal choices about language.

AI text has model fingerprints instead. The GPT-4 voice. The Claude voice. Distinguishable from each other, less distinguishable across outputs from the same model.

When everyone writes through the same model, everyone sounds like that model. Individual voice dies.

This is already happening. You can see it in academic writing. Papers from different authors, different institutions, different fields—they're starting to sound the same. Not because the authors are copying each other, but because they're all using AI to "improve" their writing.

The improvement is real in one sense: fewer grammatical errors, clearer sentences, better structure. But improvement in mechanics comes at the cost of voice. The quirks get smoothed out. The distinctive patterns get normalized. The individuality gets homogenized.

What made an author recognizable was partly their flaws. Faulkner's endless sentences. Hemingway's staccato minimalism. David Foster Wallace's footnotes and digressions. These weren't bugs. They were signatures.

AI doesn't preserve signature. It optimizes for a different target: clarity, correctness, engagement. The output is readable. It's professional. It's competent. It's also generic.

The person who writes a rough first draft, then uses AI to polish it, ends up with text that sounds like the AI wrote it. Their original voice is still there, faintly, but filtered through the model's preferences. The result is them, but compressed. Them, but averaged.

Scale this across millions of writers and you get convergence. Not deliberate convergence—nobody's trying to sound the same. But statistical convergence. Everyone's text is being pulled toward the same attractor: the model's preference distribution.
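A toy model shows why the pull flattens things. Suppose each writer has some measurable habit (say, average sentence length) and AI polishing moves it part of the way toward the model's preferred value. The writers, the model value of 18, and the 0.7 polishing strength below are all invented numbers, and `polish` is a hypothetical name.

```python
from statistics import pstdev


def polish(values, model_value, strength):
    """Move each writer's value a fraction `strength` of the way to the model's."""
    return [v + strength * (model_value - v) for v in values]


# Hypothetical average sentence lengths for four writers, before polishing.
writers = [8.0, 14.0, 22.0, 31.0]

# Hypothetical model preference and polishing strength.
polished = polish(writers, model_value=18.0, strength=0.7)

spread_before = pstdev(writers)
spread_after = pstdev(polished)
# The spread shrinks by a factor of (1 - strength), whatever the model value is.
```

Nobody in this toy is trying to sound like anybody else. The spread collapses anyway, because everyone is being pulled toward the same point.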

The diversity of English—the regional variations, the generational markers, the subcultural dialects—gets compressed. Not eliminated, but flattened. The edges get rounded off.

What's lost isn't just style. It's information. You used to be able to infer things about a writer from their text. Education level. Age. Cultural background. Personality. The writing betrayed the person.

AI-filtered text betrays less. It's harder to know who's behind it. The text becomes opaque in a new way—not because it's unclear, but because it's too clear. Too smooth. Too consistent with itself and with every other AI-filtered text.

This might not matter for some purposes. Business communication probably doesn't need personality. But for writing that's supposed to convey a person (essays, criticism, anything where the writer's perspective matters), the loss is real.

The Academic Disaster

Academia is being hit hard.

Papers that used to have distinctive voices now read the same. Not because students are cheating—though some are—but because everyone uses AI to "polish" their work. The polishing removes the person.

Peer reviewers are seeing thousands of papers with the same cadences. The same transitions. The same delving into topics and exploring further.

How do you evaluate thought when thought is being filtered through homogenizing software?

You can't, really.

The Business Speak Convergence

Business communication was already bad. Jargon-laden. Template-driven. Optimized for being unquotable.

AI made it worse and better simultaneously.

Better: fewer obvious errors. Clearer sentences. Less rambling.

Worse: completely indistinguishable. Every email sounds like every other email. Every report reads like every other report. The model's voice replaces the human's voice.

This might not matter. Maybe business communication should be standardized. Maybe personality is a distraction.

But something is lost when you can't tell who wrote what.

The Tell Evolution

The tells will keep evolving.

First generation: "delve," "certainly," "it's important to note."

Second generation: Subtler. Maybe the paragraph structure. Maybe the ratio of questions to statements. Maybe the way examples are introduced.

Third generation: Invisible to humans. Only detectable by other AI, or by statistical analysis over large corpora.

At some point, AI-written and human-written become indistinguishable in practice. The tells disappear—or become so subtle that detecting them isn't worth the effort.

Then what?

The Authenticity Question

Why do we care if text is AI-generated?

Trust. AI text carries a different kind of accountability. No one stands behind it the same way.

Expertise. Human-written text suggests someone actually understood. AI-written text might be sophisticated pattern matching without comprehension.

Relationship. When someone writes to you, there's a person on the other end. When AI writes, there isn't.

But these distinctions are collapsing. Humans using AI heavily. AI fine-tuned on human feedback. The boundary blurs.

Maybe authenticity as we understood it is ending. Maybe the question "did a human write this" becomes as irrelevant as "did a human set this type" became after the printing press.

Or maybe something essential is being lost that we'll only recognize after it's gone.

The question "did a human write this" used to have clear implications. If a human wrote it, someone thought about it. Someone made choices. Someone took responsibility for the claims. The text was evidence of human cognition.

If AI wrote it, none of that follows. The text might be sophisticated pattern matching with no understanding behind it. No one thought about it in the way we usually mean "thought about." No one takes responsibility—the AI has no accountability, and the person who prompted it can disclaim ownership ("the AI said that, not me").

This matters for trust. When you read something, you're implicitly trusting that a person put their judgment behind it. That if it's wrong, someone is accountable. That if it's insightful, someone earned that insight.

AI text breaks this contract. The text might be correct or incorrect, insightful or banal, but there's no human judgment necessarily behind it. It's generated. The prompting human might not even have read it carefully before publishing.

We're entering a world where most text has this character. Written by AI, lightly edited by humans, published with minimal verification. The text looks authoritative—it's well-formatted, grammatically correct, confidently stated. But there's no there there. No person who really knows. Just pattern matching all the way down.

This is why expertise becomes harder to verify. You can't tell from the text anymore whether the author understands deeply or prompted well. The surface signals—sophistication, vocabulary, structure—can all be generated.

The only way to verify expertise is through demonstrated capability—can the person actually do the thing, not just write about it? This shifts evaluation from text to action. From what someone says to what someone ships.

For relationships, the question is whether text from a person using AI is still communication in the old sense. If I receive an email that was drafted by AI and lightly edited by a human, am I hearing from the human? Sort of. Their intent is there. Their approval (presumably) is there. But their voice, their thought process, their actual engagement with the topic—maybe not.

This creates a weird distance. The person is there, but mediated. You're communicating, but through a layer of AI generation that smooths out the personality, the idiosyncrasy, the humanity.

Maybe this is fine. Maybe communication is about information transfer, and if AI makes that more efficient, we should welcome it. Maybe personality in business communication was always unnecessary friction.

Or maybe we lose something irreplaceable when text stops being evidence of thought and becomes evidence of prompting.

The New English

English isn't just changing. It's forking.

One branch: AI English. Smooth, formal, homogenized. The default for most written communication.

Another branch: Human English. Idiosyncratic. Messy. Full of personality and error and voice.

Human English might become a marker. A signal of authenticity. A way to demonstrate personhood through the very roughness that AI smooths away.

Or it might become an affectation. Like insisting on handwritten letters when email exists. Charming but impractical.

The tells that once marked AI writing might eventually mark human writing. "This is rough and inconsistent and weird—must be a person."

Delve into that.
